DOWNLOAD THE ENTIRE FEBRUARY 2017 NEWSLETTER including this month’s Freebie.

Although few of us spend time contemplating the molecular messengers at work in our brain, we owe a tremendous amount to them—and to dopamine in particular. It plays a part in movement, motivation, mood and memory. But it also has a dark side. The neurotransmitter is implicated in addiction, schizophrenia, hallucinations and paranoia. Yet dopamine is best known for its role in pleasure. In the popular press, dopamine is delight; the brain’s code word for bliss; the stuff that makes psychoactive drugs dope. Articles and documentaries describe dopamine as what makes life worth living, the chemical that permits every enjoyable moment to be savoured, the “hit” everyone is chasing whether through social media, psychoactive substances, sports, food, sex or status.

But it may be time to rethink these ideas. Nora Volkow, director of the National Institute on Drug Abuse and a longtime researcher of this neurotransmitter, says that dopamine is not the pleasure molecule in the simple, direct way it is typically portrayed in the media. Its function is apparently much more nuanced.

Today the precise nature of dopamine is a matter of much controversy. Some researchers argue that dopamine, when acting within what has become known as the brain’s reward system, signals desire. Others claim that it helps the brain predict rewards and direct behaviour accordingly. A third group splits the difference, saying both explanations can be valid. Ironically, if there is anything scientists now agree on about this neurotransmitter, it is that dopamine does not neurologically define joy. Instead this little molecule may unlock the intricate mystery of what drives us.

Missing Motivation

In 1978 Roy Wise, then at Concordia University in Quebec, published a seminal paper on dopamine. He blocked dopamine signalling in rats with antipsychotic medications and found that the rats would stop working to receive yummy foods or desirable drugs such as amphetamine. The animals could still make the movements needed to obtain what they should have craved, which suggested that their behaviour changed because the experience was no longer rewarding. Dopamine, at least when acting in a circuit located near the middle of the brain, seemed to be necessary for anything to feel good.

Over the next decade, data in support of Wise’s idea only grew. So when neuroscientist Kent Berridge began researching dopamine around that time, he believed, like most of his colleagues, that it was a “pleasure” signal. Berridge’s own work focused on facial expressions of pleasure, which are surprisingly similar among mammals. Even rats will avidly lick their lips when they receive sweet food and open their mouths in disgust after encountering a bitter taste, as will human babies. Typically, mammalian expressions of satisfaction intensify when, for example, a hungry rat receives an especially tasty treat or a thirsty rat finally drinks water. Berridge thought that studying and measuring these responses could further confirm the idea that dopamine means pleasure to the brain.

His colleague at the University of Michigan, Terry Robinson, had been using a neurotoxin to destroy dopamine neurons and create rats that modelled severe symptoms of Parkinson’s. Berridge decided to give sweet foods to these rodents and see if they appeared pleased. He expected that their lack of dopamine would deny them this response. Because they were so dopamine-depleted, Robinson’s rats rarely moved if left alone. They did not seek food and had to be fed artificially. Unexpectedly, however, their facial reactions were completely normal—they continued to lick their lips in response to something sweet and grimace at a bitter meal.

Berridge and his colleagues tried again and again but got the same results. When they conducted an experiment that created essentially the opposite conditions, ramping up dopamine levels in rats using electrodes implanted in appropriate regions, the rats did not lick their chops more eagerly when eating, as the “dopamine is pleasure” theory predicted they would. Indeed, sometimes the animals actually seemed less pleased when they scoffed down their sweets. Nevertheless, they kept eating far more voraciously than normal.

The researchers were puzzled. Instead of producing pleasure, dopamine seemed to drive desire. Desire itself can be enjoyable in small doses—but in the long run, if it is not satisfied, it is just the opposite. Eventually Berridge and Robinson realised that the pleasure involved in seeking a reward and that of actually obtaining it must be distinct. They labelled the drive that dopamine seemed to induce as “wanting” and called the joy of being satiated, which did not seem to be connected with dopamine, “liking.”

This dissociation fit with studies of Parkinson’s patients. They are still, after all, able to enjoy life’s ups but often have problems with motivation. Perhaps the most vivid example of this occurred in the early 20th century, when an epidemic of encephalitis lethargica left thousands of people with an especially severe parkinsonian condition. Their brains were so depleted of dopamine that they were unable to initiate movement and were essentially “frozen in place” like living statues. (The film Awakenings, in which Robin Williams played a neurologist modelled on Oliver Sacks, was based on Sacks’s 1973 memoir of treating such patients.) But a sufficiently strong external stimulus could spark action for people with this condition. In one case cited by Sacks, a man who typically sat motionless in his wheelchair on the beach saw someone drowning. He jumped up, rescued the swimmer and then returned to his prior rigidly fixed position. One of Sacks’s own patients would sit silent and still unless thrown several oranges, which she would then catch and juggle.

A class of medications known as dopamine agonists is used to restore movement and motivation. Dopamine receptors respond to these drugs as if they were the real thing. Consequently, the medications can offer excellent relief from tremors, rigidity and other movement problems. But the drugs can also have some destructive and distressing side effects. Some patients go from having too little motivation to having too much, or at least the motivation that the drug ignites is misdirected. In addition to overeating, problems seen with dopamine agonists can include gambling, obsessions (e.g., with an iPhone), compulsive online shopping and intrusive sexual desires. Subjectively, the experiences described by patients are nearly identical to those reported by people with more typically caused addictions.

Similarly, many people with addictions experience an escalation in desire that is not matched by an increase in enjoyment. This is what Berridge and Robinson call the “incentive sensitisation” theory of dopamine action, which they introduced in 1993 and which has been bolstered by more recent studies. In 2005, for example, a research team tracked the brain activity of eight people addicted to cocaine as they pushed a button to self-administer the drug. In line with Berridge’s “wanting” theory, activity along dopamine pathways peaked just before they pushed the button.

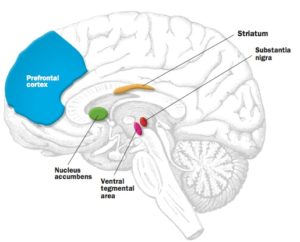

What dopamine does, in this view, is take the things you encounter (little cues, things you smell and hear) and, if they have motivational significance, magnify that significance, which raises the incentive to pursue them. Berridge found that placing dopamine directly into the nucleus accumbens of rats makes them work two to three times harder to get what they crave but does not amplify the pleasurable experience of rewards once they are obtained.
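The wanting/liking split can be made concrete with a deliberately simple toy, written in Python. It is not Berridge’s model: the `dopamine_gain` parameter, the linear scaling and the numbers are assumptions, with a gain of 2.5 chosen only to echo the “two to three times harder” result.

```python
from dataclasses import dataclass


@dataclass
class Response:
    effort: float  # how hard the animal works to get the reward ("wanting")
    liking: float  # hedonic impact once the reward is consumed ("liking")


def respond_to_cue(cue_significance: float, hedonic_value: float,
                   dopamine_gain: float = 1.0) -> Response:
    """Hypothetical toy: dopamine magnifies a cue's motivational pull, not the reward's pleasure."""
    effort = dopamine_gain * cue_significance  # incentive to pursue is scaled up
    liking = hedonic_value                     # left untouched by dopamine in this toy
    return Response(effort=effort, liking=liking)


baseline = respond_to_cue(cue_significance=1.0, hedonic_value=1.0)
boosted = respond_to_cue(cue_significance=1.0, hedonic_value=1.0, dopamine_gain=2.5)
print(baseline)  # Response(effort=1.0, liking=1.0)
print(boosted)   # Response(effort=2.5, liking=1.0)
```

Raising the gain changes how hard the animal works while the `liking` field stays put, which is the dissociation Berridge and Robinson describe.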

Prediction Engines

More recently, other researchers have focused on a different function for dopamine in the brain’s motivational systems. They say that the brain uses dopamine in these regions not so much as a way to spur behaviour through wanting but as a signal that predicts which actions or objects will reliably provide a reward. It encodes the difference between what you get and what you expected. This is known as the “reward prediction error” theory of dopamine.

In a series of experiments begun in the 1980s, Wolfram Schultz and his colleagues showed that when monkeys first get something pleasant—in this case, fruit juice—their dopamine neurons fire most intensely when they drink the liquid. But once they learn that a cue like a light or a sound predicts the delivery of delicious stuff, the neurons fire when the cue is perceived, not when the reward is received. This response changes when the value of the reward shifts. If a reward is bigger or better than expected, the dopamine neurons fire more in response to this happy surprise; if it is nonexistent or smaller than anticipated, dopamine levels crash.
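The shift Schultz observed, from firing at the juice to firing at the cue, is commonly modelled with temporal-difference (TD) learning. The Python sketch below is a textbook TD(0) toy, not Schultz’s own analysis. The three time steps between cue and juice, the learning rate `alpha` and the assumption that the cue arrives at an unpredictable moment (so the instant before it is worth nothing) are all illustrative choices.

```python
from typing import List, Tuple


def td_trial(values: List[float], reward: float, alpha: float = 0.2,
             gamma: float = 1.0) -> Tuple[float, float]:
    """Run one cue-then-juice trial of a toy TD(0) model, updating `values` in place.

    values[t] is the learned value of the t-th time step after the cue.
    Returns the prediction error at cue onset and at the moment the juice is due.
    """
    # Assumption: the cue arrives at an unpredictable time, so the moment just
    # before it is worth 0 and the error at cue onset is the cue state's value.
    delta_cue = gamma * values[0]
    delta_reward = 0.0
    last = len(values) - 1
    for t in range(len(values)):
        r = reward if t == last else 0.0                  # juice only at the final step
        next_value = 0.0 if t == last else values[t + 1]  # the trial ends after the juice
        delta = r + gamma * next_value - values[t]        # reward prediction error
        values[t] += alpha * delta                        # learn a little from the error
        if t == last:
            delta_reward = delta
    return delta_cue, delta_reward


values = [0.0, 0.0, 0.0]                    # three hypothetical time steps from cue to juice
naive = td_trial(values, reward=1.0)        # first trial: the juice is a surprise
for _ in range(300):
    trained = td_trial(values, reward=1.0)  # after training: the cue is the surprise
omitted = td_trial(values, reward=0.0)      # the expected juice is withheld

print(naive)                                 # (0.0, 1.0)
print(tuple(round(d, 3) for d in trained))   # (1.0, 0.0)
print(tuple(round(d, 3) for d in omitted))   # (1.0, -1.0)
```

In this toy the error at the juice shrinks as the cue comes to predict it, the error at the cue grows, and withholding the juice produces a negative error, the model’s counterpart of the dip in dopamine firing.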

In a 2016 study, Schultz and his colleagues asked 27 participants undergoing magnetic resonance imaging to look at a computer screen showing a series of rectangles, each representing a “range” of money (for example, £0 to £100), without specific values indicated. A crosshair landed somewhere along a rectangle to indicate a cash prize. Across several trials, people guessed the amount and then (virtually) received it. Meanwhile the researchers tracked activity in select dopamine hotspots. They found that activity in the substantia nigra and ventral tegmental area, neighbouring regions in the midbrain, was linked to people’s prediction errors: whether they were pleasantly surprised or disappointed by the prize. In addition, activity in these areas over the course of the experiment was related to how well participants adjusted their estimates as they learned from past mistakes. Schultz therefore sees dopamine as playing a role in how we learn what to seek and what to avoid.

In this view, dopamine does not signify how pleasant an experience will be but how much value it has to the organism at that particular moment. Schultz notes that dopamine neurons do not distinguish among different types of reward. They are specific about the prediction error and the value, but they don’t care whether the reward is food, liquid or money.

Schultz suggests dopamine serves as a common currency system for desire. For example, when the brain receives a signal that the body needs water, the value of water for that individual at that time should rise. Because this makes a cold drink more attractive, quenching thirst will be prioritised, avoiding dehydration. Yet, Schultz explains, “if I fall in love, then all my other rewards become relatively less valuable.” A glass of water will pale in comparison to a chance to be with the beloved.
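Schultz’s “common currency” idea can be illustrated with a toy Python comparison in which every option, whatever its kind, is reduced to one number that depends on the body’s current state. The need weights, the base values and the choice to express each option as a share of the total (a simple normalisation) are assumptions made for this sketch, not measurements.

```python
from typing import Dict


def relative_values(base_values: Dict[str, float], need: Dict[str, float]) -> Dict[str, float]:
    """Put every option on one scale: weight it by current need, then divide by the total.

    Both the need weighting and the divide-by-total step are assumptions of this toy.
    """
    scaled = {name: base * need.get(name, 1.0) for name, base in base_values.items()}
    total = sum(scaled.values())
    return {name: value / total for name, value in scaled.items()}


options = {"water": 1.0, "snack": 1.0, "money": 1.0}     # hypothetical base values

rested = relative_values(options, need={})
thirsty = relative_values(options, need={"water": 4.0})  # the body signals a need for water
in_love = relative_values({**options, "beloved": 10.0}, need={"water": 4.0})

print(max(thirsty, key=thirsty.get), max(in_love, key=in_love.get))  # water beloved
print(round(rested["water"], 2), round(thirsty["water"], 2), round(in_love["water"], 2))  # 0.33 0.67 0.25
```

Thirst lifts water to the top of the list, and adding a very valuable option shrinks the relative value of everything else, including the water, as in Schultz’s falling-in-love example.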

From this perspective, it is easy to see why dopamine would be critical to addiction. If drugs or other compelling pleasures alter the way the reward system determines what is valuable, the addictive behaviour will be given top priority and motivation will shift accordingly. Seeing dopamine this way can also explain a number of psychological phenomena. Consider how people typically prefer a smaller reward now to a bigger one later, what economists term “delay discounting.” This shift occurs because as rewards recede into the distant future, they are far less powerful than those that are just about to be received—and are represented by progressively lower amounts of dopamine.
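Delay discounting is often modelled with a hyperbolic function, V = A / (1 + kD), where A is the reward amount, D the delay and k a person-specific discount rate. The text does not commit to any particular function; the hyperbolic form and the value of `k` below are assumptions chosen to show how a smaller, sooner reward can outrank a larger, later one.

```python
def hyperbolic_value(amount: float, delay_days: float, k: float = 0.05) -> float:
    """Present value of a delayed reward under hyperbolic discounting (k per day is assumed)."""
    return amount / (1.0 + k * delay_days)


soon = hyperbolic_value(50.0, delay_days=0)     # £50 today
later = hyperbolic_value(100.0, delay_days=30)  # £100 in a month
print(soon, later)  # 50.0 40.0

# Push both options a year into the future: the same 30-day gap now matters less.
far_soon = hyperbolic_value(50.0, delay_days=365)
far_later = hyperbolic_value(100.0, delay_days=395)
print(soon > later, far_soon > far_later)  # True False
```

With these made-up numbers the smaller reward wins when it is immediate, but the preference flips once both are distant, a reversal that hyperbolic models produce and exponential ones do not.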

Moreover, if dopamine codes reward prediction error, it could also account for the so-called hedonic treadmill, that sadly universal experience in which what initially makes us ache with desire over time becomes less alluring, requiring a greater intensity of experience, a new degree of novelty or a higher dose to achieve the same joy. (You buy a new car, but driving it soon becomes routine and you start to crave a fancier one.) According to reward prediction error theory, when there is no prediction error (when something is exactly as pleasant as expected, no more and no less), dopamine levels do not budge. But your current pleasure may raise your expectations for the next experience; if that experience is no better, the prediction error is smaller and your reaction weaker. (This logic would also support the 1965 hypothesis by Mick Jagger et al. regarding the low probability of getting long-term satisfaction!)
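The treadmill argument can be run as a few lines of arithmetic using a simple delta rule (Rescorla-Wagner style), in which expectation moves a fixed fraction of the way toward each outcome. The learning rate `alpha` and the reward sizes are assumptions; the point is only that an unchanging reward yields a shrinking prediction error, and that a bigger reward restores it only temporarily.

```python
from typing import List


def prediction_errors(rewards: List[float], alpha: float = 0.5,
                      expectation: float = 0.0) -> List[float]:
    """Return the prediction error (reward minus expectation) for each experience in turn."""
    errors = []
    for reward in rewards:
        error = reward - expectation
        errors.append(error)
        expectation += alpha * error  # today's enjoyment raises tomorrow's expectation
    return errors


# Hypothetical values: five drives in the new car (10), then a fancier one (15).
errors = prediction_errors([10, 10, 10, 10, 10, 15, 15, 15])
print(errors)  # [10.0, 5.0, 2.5, 1.25, 0.625, 5.3125, 2.65625, 1.328125]
```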

Other researchers have begun putting Schultz’s ideas to the test. In 2016, neuroscientist Read Montague and his colleagues published findings involving 17 people with Parkinson’s who had brain implants that could measure changes in dopamine in the striatum, a region deep in the forebrain that receives dopamine from the midbrain and is linked to rewarding experiences. They found that dopamine signalling might be even more nuanced than a simple calculation comparing experience with expectations.

In the experiment, the patients played a game that involved betting on a simulated market. While playing, they considered the possible outcomes of various choices and later evaluated their decisions based on what had actually occurred. The recorded dopamine signals did not track a simple reward prediction error. Instead they varied with how the bets turned out compared with how the investment would have fared had the player chosen differently. In other words, if someone won more than she expected but could have won even more with a different choice, her dopamine release was smaller than if she had not known there was a way to do even better.

Conversely, if someone lost a few dollars but could have lost a lot more with a different choice, dopamine would rise somewhat. This finding may help explain why knowing that “it could have been worse” can turn what would otherwise feel awful into a positive, or at least less dire, experience.
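Montague’s finding suggests the signal weighs outcomes against what might have happened, not only against what was expected. The Python toy below expresses that idea by adding a weighted “regret or relief” term to an ordinary prediction error. It is an illustrative assumption, not the model fitted in the study; the weight `w` and the bet amounts are made up.

```python
from typing import Optional


def counterfactual_error(actual: float, expected: float,
                         alternative: Optional[float] = None, w: float = 0.5) -> float:
    """Toy signal: a reward prediction error plus a weighted comparison with the road not taken.

    alternative is what the other choice would have paid (None if the player never learns it);
    w is a hypothetical weight, not a value from the study.
    """
    error = actual - expected                # ordinary reward prediction error
    if alternative is not None:
        error += w * (actual - alternative)  # relief if the alternative was worse, regret if better
    return error


won_unaware = counterfactual_error(30.0, expected=10.0)
won_could_do_better = counterfactual_error(30.0, expected=10.0, alternative=60.0)
lost_unaware = counterfactual_error(-5.0, expected=0.0)
lost_could_be_worse = counterfactual_error(-5.0, expected=0.0, alternative=-50.0)

print(won_unaware, won_could_do_better)   # 20.0 5.0
print(lost_unaware, lost_could_be_worse)  # -5.0 17.5
```

Knowing a better option existed shrinks the signal after a win, and knowing a worse one existed turns a small loss into a positive signal, mirroring the two cases described above.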

Putting It All Together

Although some scientists view the reward prediction error theory and Robinson and Berridge’s incentive sensitisation theory as incompatible, they do not directly falsify each other. Indeed, many experts think that each captures some element of the truth. Dopamine might signal wanting in some neurons or circuits and could signify reward prediction error in others.

Alternatively, these functions may operate on different timescales, as suggested by a 2016 study in rats conducted by colleagues of Berridge and Robinson. The study, published in Nature Neuroscience, found that second-to-second changes in dopamine levels were consistent with dopamine as an indicator of value, which supports the reward prediction error hypothesis. Longer-term changes, over the course of minutes, however, were linked with changes in motivation, which bolsters the incentive sensitisation theory.

Our feelings, in a sense, are decision-making algorithms that evolved to guide behaviour toward what was historically most likely to promote survival and reproduction. Pleasure can cue us to repeat activities such as eating and sex; fear drives us away from potential harm. But if the brain regions that determine what you value go askew, it can be extremely difficult to change your behaviour because these areas will make you “want” to continue and will also make the addictive behaviour “feel” right.

Daniel Weintraub, a psychiatrist at the University of Pennsylvania, says that Parkinson’s patients often develop impulse-control disorders while taking dopamine agonists; roughly 8 to 17 percent of people on such drugs are affected. The fact that stopping these drugs can end the addictive behaviour so abruptly and decisively shows how critical dopamine is in driving it.

Although dopamine can modulate our drives, it is not the only determinant of what we do and what matters to us. Ultimately what we humans seek and value is a little more complicated than our fleeting desires.

REFERENCES

■ Awakenings. Oliver Sacks. Duckworth, 1973.

■ Dopamine Reward Prediction Error Coding. Wolfram Schultz in Dialogues in Clinical Neuroscience, Vol. 18, No. 1, pages 23–32; March 2016.

■ Parkinson’s Disease Foundation: www.pdf.org

■ The Currency of Desire. Maia Szalavitz in Scientific American Mind, Vol. 28, No. 1, pages 48–53; January/February 2017.